Best AI Code Review Tool for GitHub, GitLab & Bitbucket

A hands‑on look at how CodeRabbit automates the boring checks so you can ship faster without cutting corners.

Hey everyone,

There is no shortage of articles online about the future of code reviews and AI.

In the rush of AI software development, one step remains a silent productivity killer: the code review.

In today’s post, I also share my honest opinion about AI code reviews.

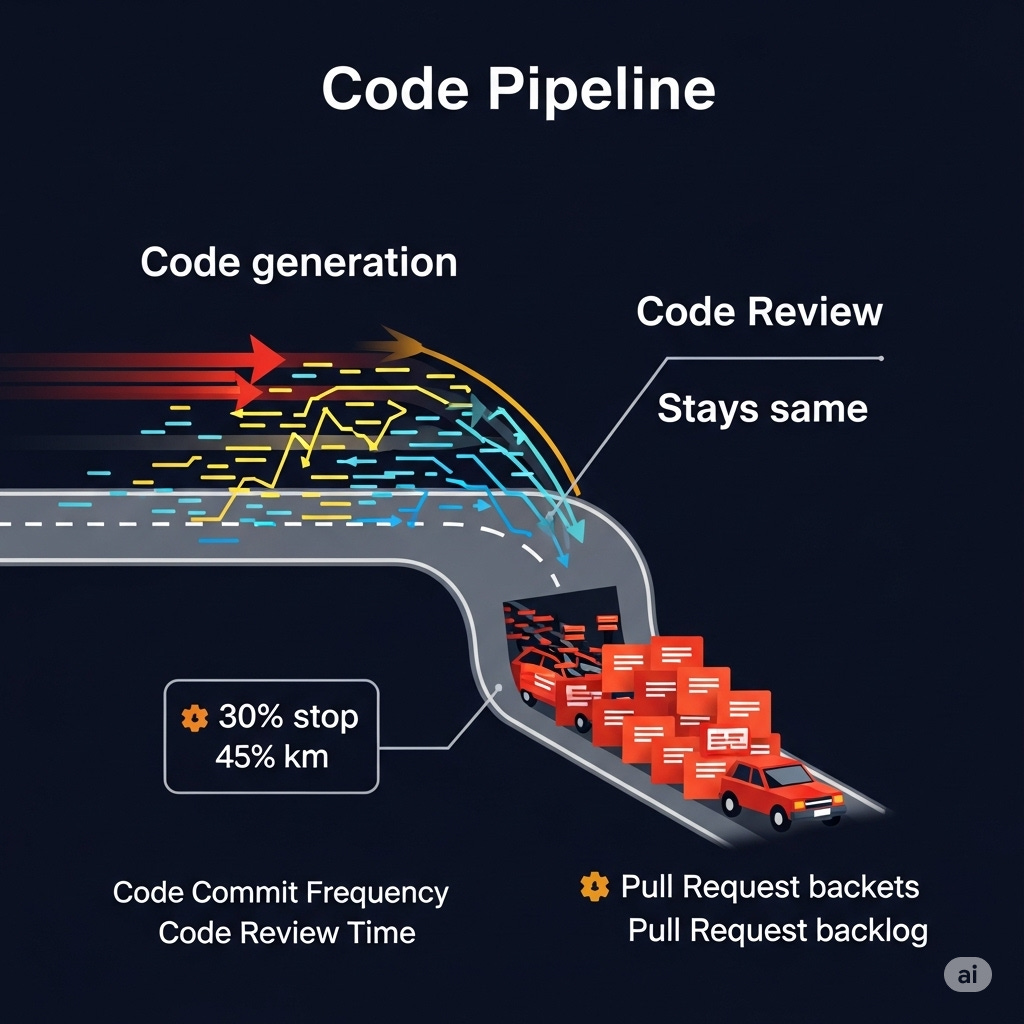

As AI coding assistants like GitHub Copilot and others generate more of our code, traditional peer reviews have become the invisible bottleneck slowing releases.

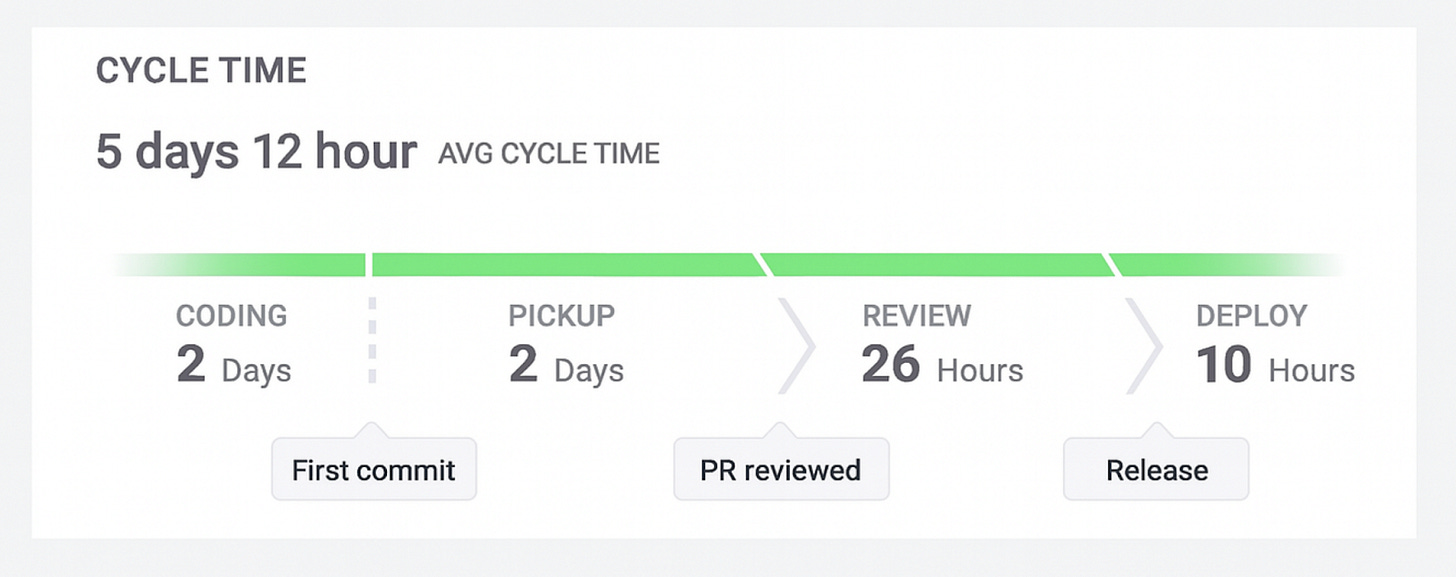

Recent data is eye-opening: pull requests often wait an average of 2+ days for a human to even begin reviewing. In fact, an analysis of over 2 million PRs by JellyFish found that half their lifespan is spent simply waiting in review queues. This lag frustrates developers (over half feel blocked by sluggish reviews) and translates to significant productivity loss.
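To make the "half their lifespan" figure concrete, here is a minimal sketch of how you might measure it for your own PRs. The function name and the timestamps are made up for illustration, and the definition used here (time from open to first review, divided by time from open to merge) is my assumption, not necessarily the one used in the analysis above.

```python
from datetime import datetime


def review_wait_share(opened: datetime, first_review: datetime, merged: datetime) -> float:
    """Return the fraction of a PR's lifespan spent waiting for its first review."""
    lifespan = (merged - opened).total_seconds()
    if lifespan <= 0:
        raise ValueError("merged must be later than opened")
    waiting = (first_review - opened).total_seconds()
    # Clamp so odd timestamps (review before open, or after merge) stay in [0, 1].
    return min(max(waiting / lifespan, 0.0), 1.0)


# Hypothetical PR: opened Monday 09:00, first reviewed Wednesday 09:00, merged Friday 09:00.
opened = datetime(2025, 3, 3, 9, 0)
first_review = datetime(2025, 3, 5, 9, 0)
merged = datetime(2025, 3, 7, 9, 0)

share = review_wait_share(opened, first_review, merged)
print(f"{share:.0%} of this PR's lifespan was spent waiting for review")
```

Run this over a few weeks of merged PRs and you get a rough picture of how much of your cycle time is queueing rather than actual work.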

In 2025, that’s precisely the scenario teams face. While AI-powered coding tools are churning out more code in seconds, reviewing this code and getting it into production is another story.

Letting Go of Gatekeeping

Code reviews have long been the gatekeeping ritual of engineering culture. Senior devs vigilantly check each line, debate style nits, and enforce standards before code enters the sacred main branch. This process is rich in knowledge sharing and quality control but also labour-intensive and slow.

Now, with AI reviewers on the scene, teams are challenged to let go of some gatekeeping and embrace automation for the repetitive parts of the review process. There’s understandable skepticism: can an AI truly judge our code? Engineering leaders caution that an AI’s evaluation should be “only a first pass to speed things up,” not the final green light.

Some developers worry about losing the human context and accountability in reviews. After all, an AI won’t know if the requirements changed yesterday or if two teams agreed on a new design in a Zoom call. It might flag excellent code as problematic because it violates a generic rule, or miss a subtle bug that only business context would reveal.

And if we hand over the reviewer’s gavel to a machine, who is responsible for bad calls?

“If you hand over the keys to AI, the chain of responsibility is lost.”

Yet, letting AI into the review process doesn’t mean abandoning human judgment; it means refocusing it. AI tools like CodeRabbit are designed to take the drudgery out of first-pass reviews, catching low-hanging issues so human reviewers can spend their energy on more meaningful feedback.

This shift calls for trust: trust that the AI can handle the repetitive nitpicks and that developers will still step in for the nuanced calls.

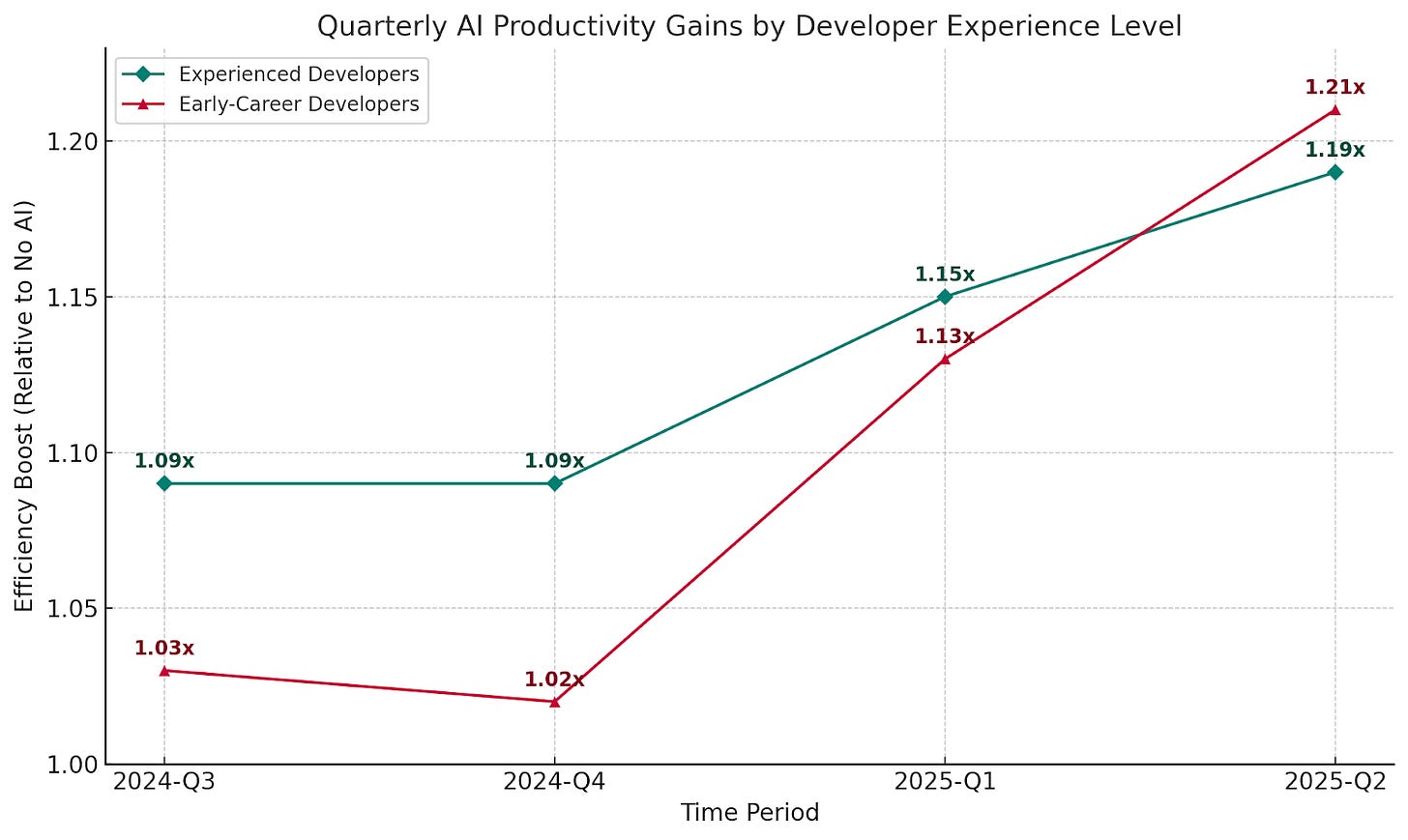

As that trust has grown, so has the impact, reshaping not just workflows but also who benefits most from AI-driven reviews.

While senior engineers initially saw the greatest productivity gains from AI, junior engineers have rapidly closed the gap.

An AI Reviewer Joins the Team

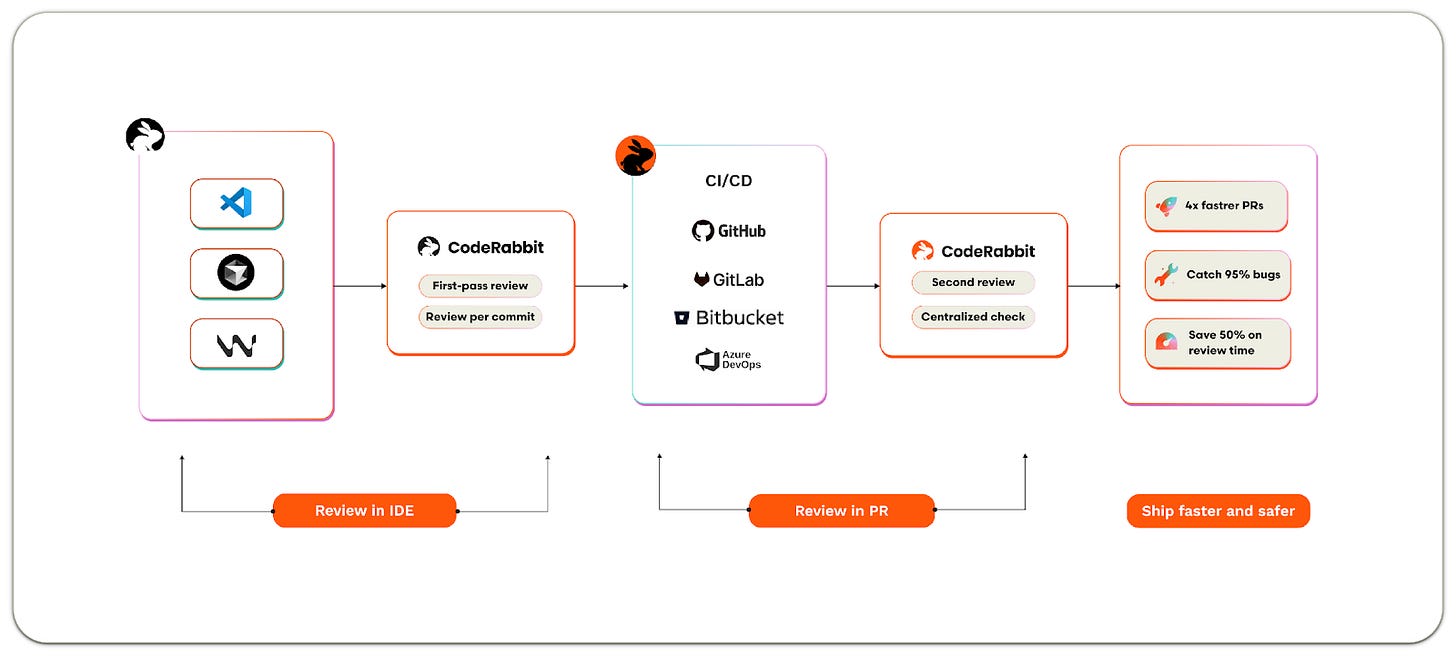

What does it look like when an AI code reviewer steps into your workflow? CodeRabbit offers a telling example. Dubbed “the dream PR reviewer” by early adopters, CodeRabbit integrates directly into platforms like GitHub, Bitbucket, and GitLab to provide instant feedback on pull requests.

CodeRabbit is the most installed AI app on both GitHub and GitLab.

CodeRabbit has so far reviewed more than 10 million PRs, is installed on 1 million repositories, and is used by 70 thousand open-source projects. CodeRabbit is free for all open-source repos.

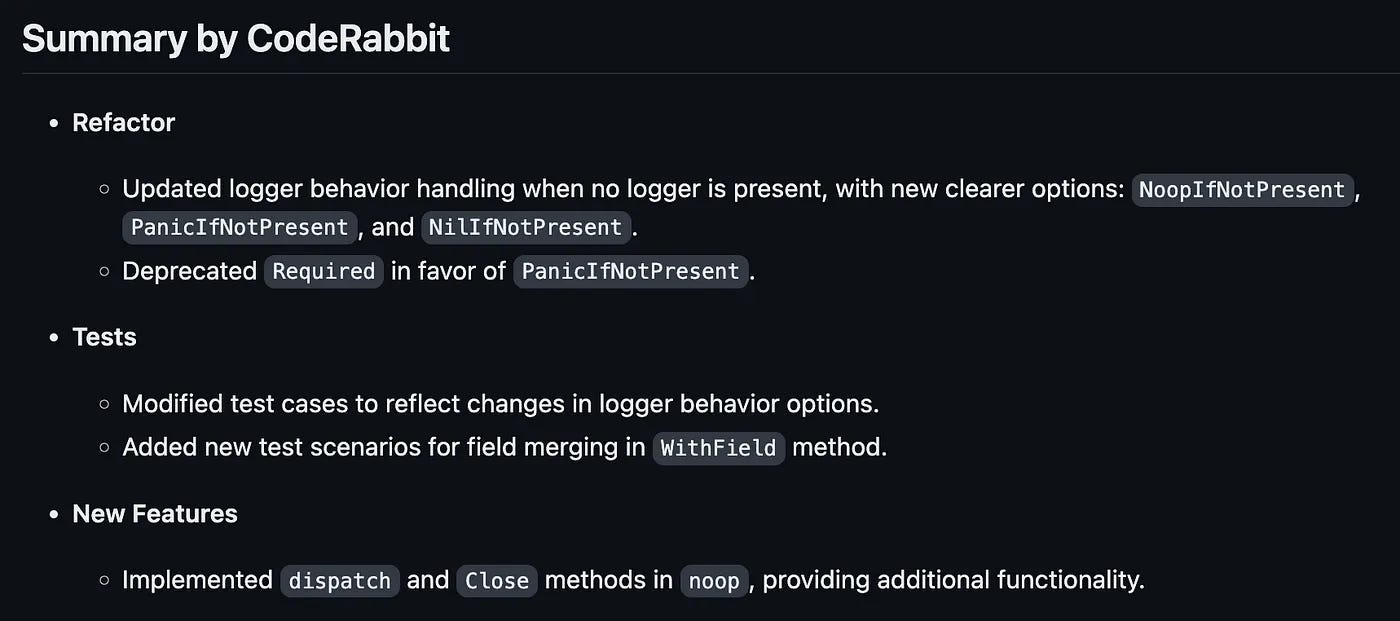

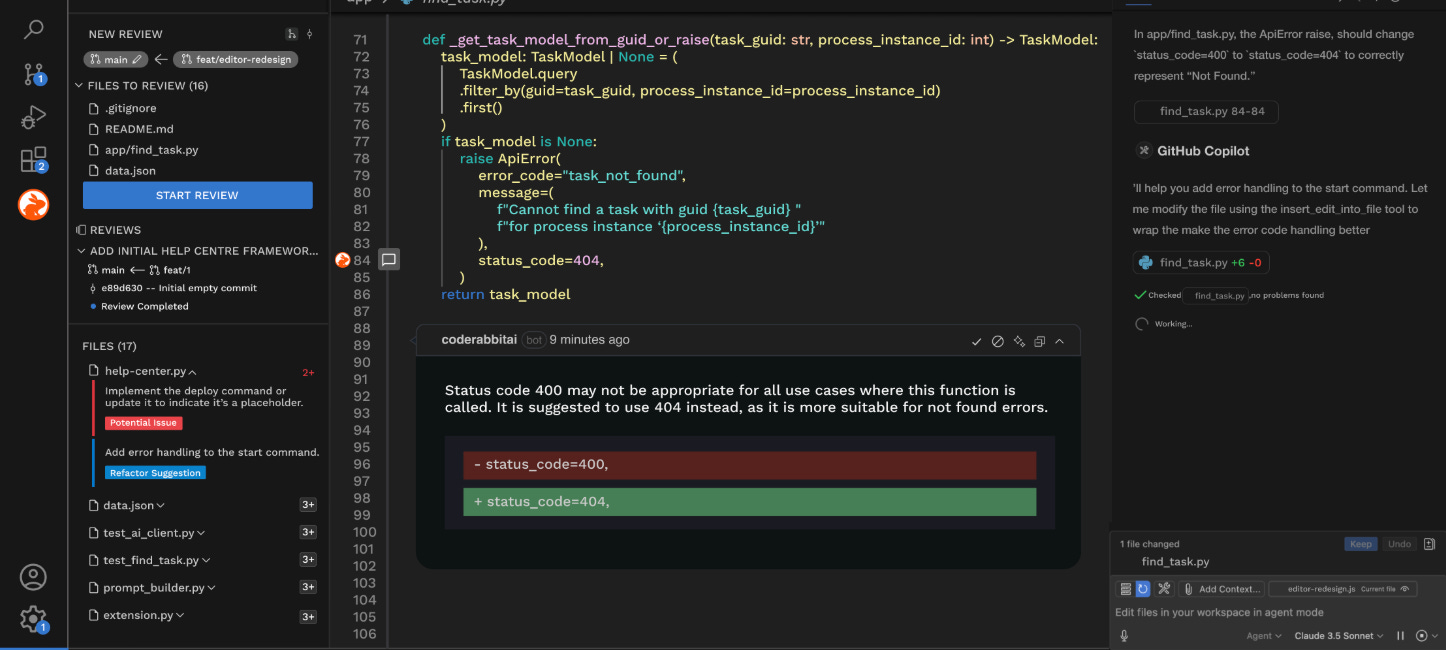

When you open a PR, the AI springs into action, analyzing the code line by line, scanning for bugs, style inconsistencies, security issues, and more. It then posts a detailed review as if it were a human team member, complete with inline comments and summaries of the changes.

One standout feature is the auto-generated PR summary. Instead of dumping a wall of text, the AI concisely explains what the code change does and why it matters. For a busy maintainer glancing at a 500-line diff, this high-level summary is a game-changer - it’s like getting a quick briefing from a diligent colleague.

CodeRabbit can even produce an “expert code walkthrough,” guiding you through the logic of a change step by step. This helps reviewers understand complex submissions faster.

But it doesn’t stop at summarizing. The AI leaves inline comments on specific lines where it spots issues. These range from potential bugs (“This SQL query doesn’t handle null user IDs”) to code smells like duplicated logic or inefficient loops.

Thanks to advanced static analysis and an AST-based code understanding, it catches things that basic linters might miss, considering the project context and even cross-file impacts.

For example, CodeRabbit’s analysis is codebase-aware: it knows if a change in one module might break an assumption in another, or if a new function violates an architectural rule your team follows. This is beyond what a standard linter does, edging into what a human architect reviewer might notice.

The most novel aspect is the interactive chat. On a CodeRabbit-reviewed PR, developers can actually converse with the AI within the comment thread.

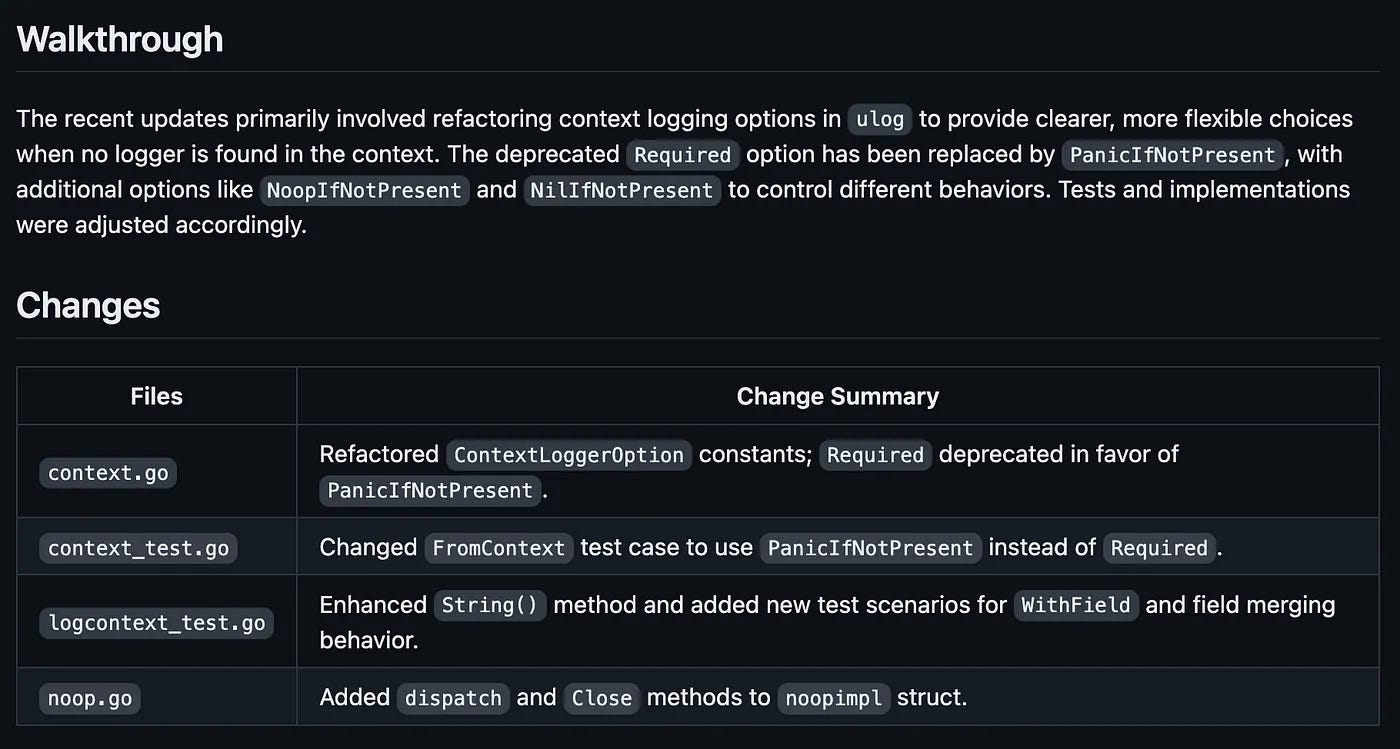

CodeRabbit provides a variety of keyword-based commands that let you control its behavior during a code review, including the following:

Pause or resume automated reviews of the pull request (@coderabbitai pause and @coderabbitai resume).

Manually request a review when automated reviews are paused (@coderabbitai full review).

Resolve all open comments authored by CodeRabbit (@coderabbitai resolve).

Update the summary text (@coderabbitai summary).
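Since these commands are just PR comments, you could also script them. Below is a minimal sketch that builds (but does not send) a request to GitHub's REST endpoint for creating a comment on a pull request. The owner, repo, PR number, and token are placeholders, `build_command_request` is a name I made up, and I'm assuming CodeRabbit reacts to a comment posted through the API the same way it does to one typed in the UI.

```python
import json
import urllib.request

# The commands listed above; anything else is rejected before a request is built.
COMMANDS = {"pause", "resume", "full review", "resolve", "summary"}


def build_command_request(owner: str, repo: str, pr_number: int,
                          command: str, token: str) -> urllib.request.Request:
    """Build a POST request that comments '@coderabbitai <command>' on a PR."""
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command!r}")
    # PR conversation comments go through the issues comments endpoint on GitHub.
    url = f"https://api.github.com/repos/{owner}/{repo}/issues/{pr_number}/comments"
    payload = json.dumps({"body": f"@coderabbitai {command}"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )


# Placeholder values, for illustration only.
req = build_command_request("example-org", "example-repo", 42, "pause", "YOUR_TOKEN")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
# To actually send it: urllib.request.urlopen(req)
```

Keeping the allowed commands in one set means a typo fails locally instead of leaving a stray comment on the PR.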

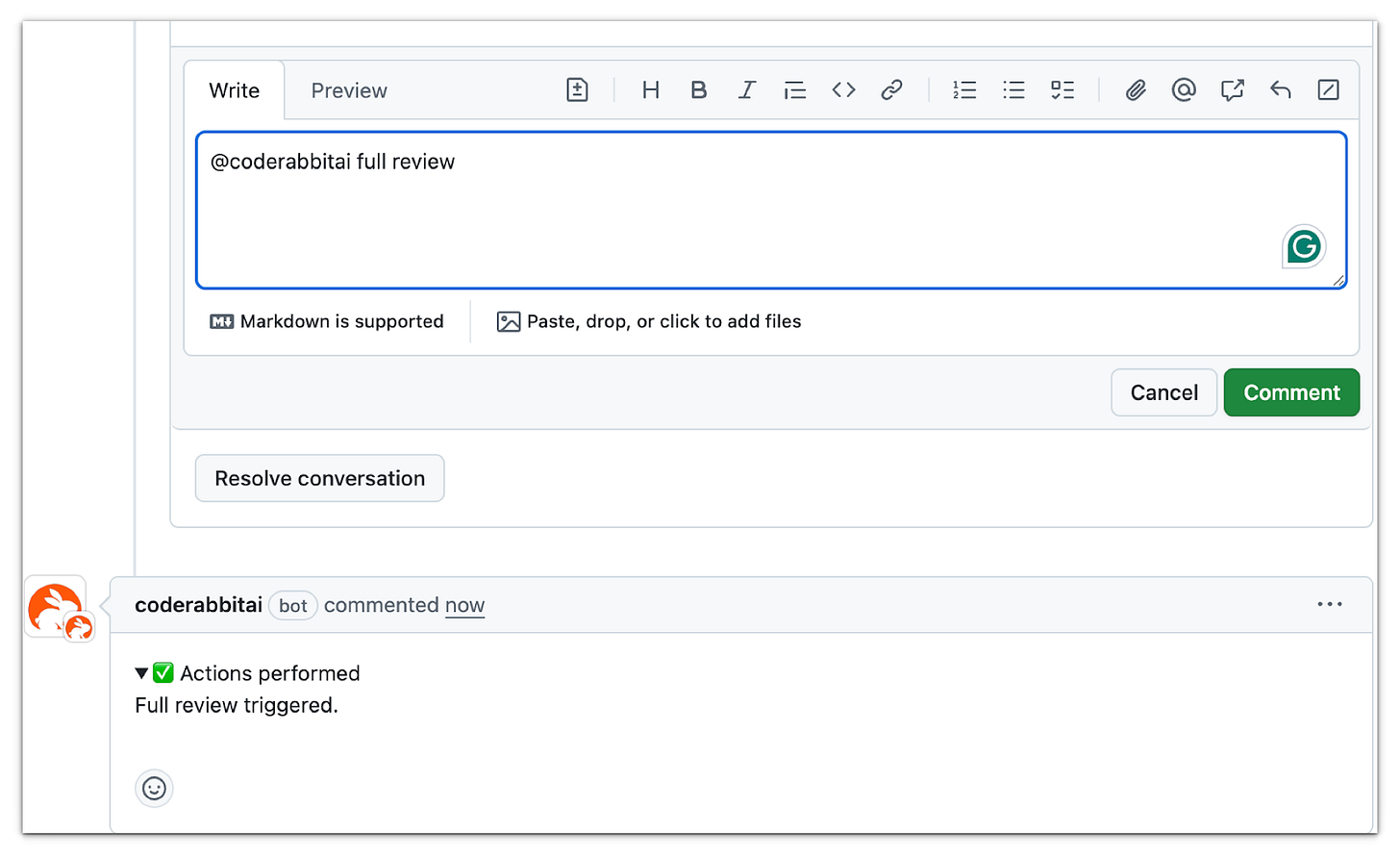

CodeRabbit integrates into developers’ IDEs (e.g. VS Code, Cursor, and Windsurf) for on-the-fly reviews. This means you can review staged or unstaged changes right in your IDE.

It will highlight a problematic snippet and suggest real-time improvements, reducing back-and-forth on the PR later.

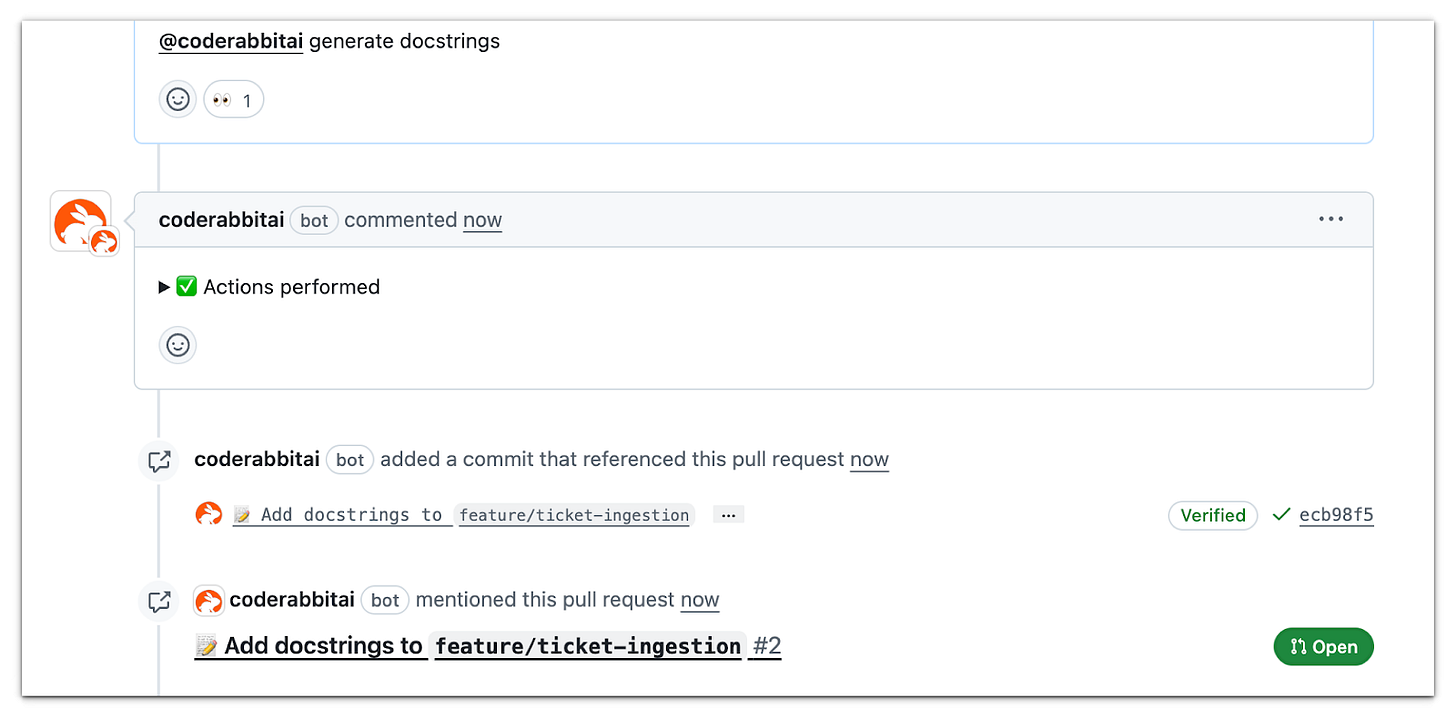

CodeRabbit can now generate docstrings for functions and methods that lack proper documentation. This feature, introduced as part of CodeRabbit’s “Finishing Touches” initiative, augments the code review process by ensuring documentation is not overlooked.

Once a pull request’s code changes are ready and initial reviews are done, a developer or reviewer can simply comment @coderabbitai generate docstrings on the pull request.
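If you're curious how a tool could even know which functions need docstrings, here is a rough sketch using Python's built-in `ast` module. To be clear, this is not CodeRabbit's implementation, just an illustration of the kind of AST-level check involved. The function name and the sample source are made up.

```python
import ast


def functions_missing_docstrings(source: str) -> list[str]:
    """Return the names of functions and methods in `source` without a docstring."""
    tree = ast.parse(source)
    missing = []
    for node in ast.walk(tree):
        # Covers plain functions, methods, and async functions.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                missing.append(node.name)
    return sorted(missing)


# Made-up sample module for illustration.
SAMPLE = '''
def documented(x):
    """Return x doubled."""
    return x * 2

def undocumented(x):
    return x + 1

class Greeter:
    def greet(self, name):
        return f"Hello, {name}"
'''

missing = functions_missing_docstrings(SAMPLE)
print(missing)  # ['greet', 'undocumented']
```

Finding the gaps is the easy, mechanical part. Writing a docstring that actually explains the function is where the AI comes in.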

Faster Merges, Better Code - The Payoffs

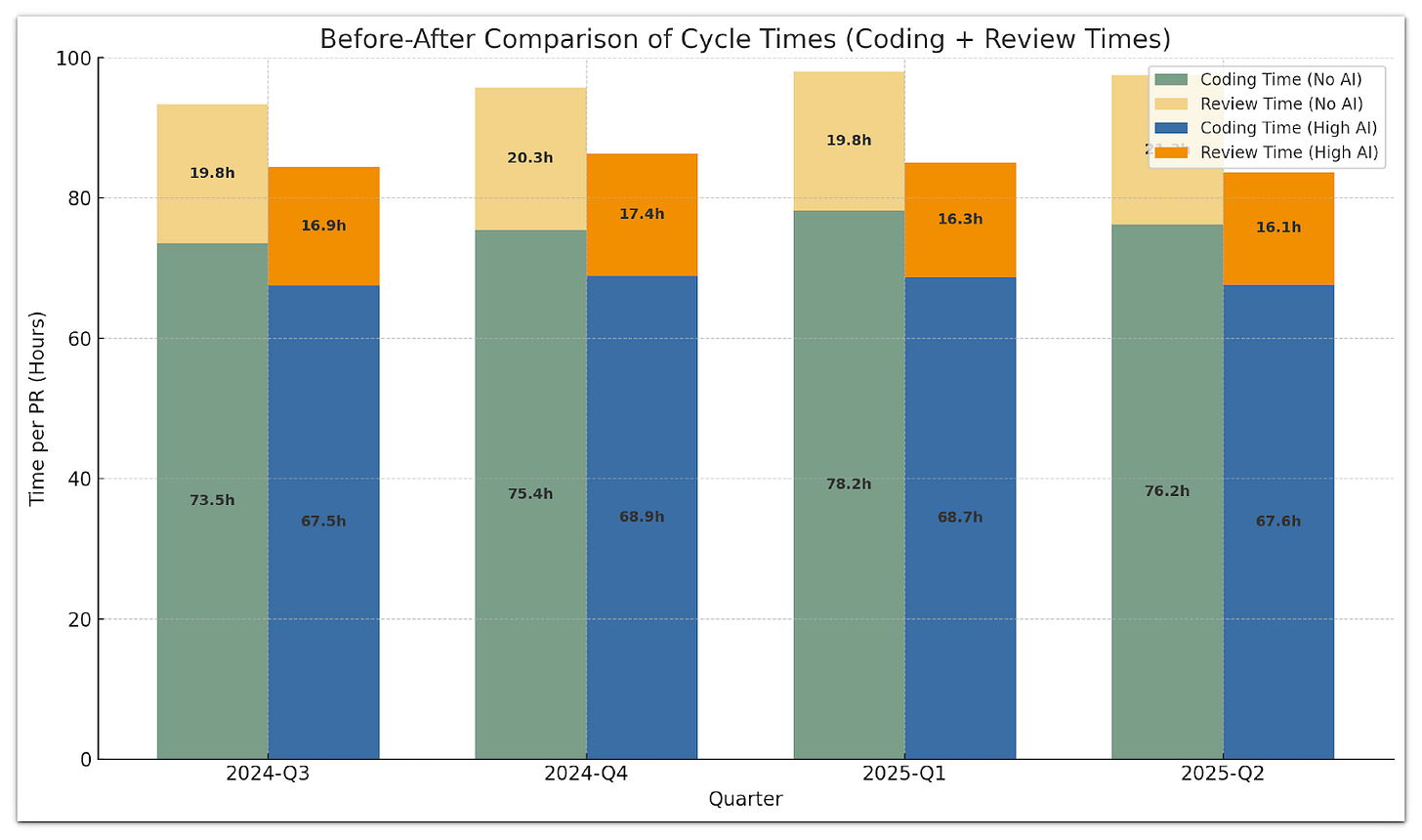

Early adopters report that AI code reviewers have supercharged their development workflow. One big win is speed: Teams merge pull requests much faster when an AI handles the initial review pass. Internal benchmarks from CodeRabbit claim that using AI cuts manual review time by 50% on average.

Similarly, a study found that automated review tools led to 89% faster merge times in some cases. These gains come from reducing the idle time spent waiting for reviewers and from streamlining the review itself: when a human picks up a PR that already has an AI-written summary and identified fixes, a review that used to take an hour might be done in 15 minutes.

It’s not just about velocity; code quality is seeing improvements, too. AI reviewers don’t get tired or skim code. They diligently check every line against a vast corpus of knowledge. CodeRabbit, for example, claims to catch roughly twice as many defects as traditional manual reviews in their trials.

Another benefit is consistency. Human reviewers each have personal habits and areas of focus - one might be strict about documentation, another cares deeply about error handling. An AI reviewer always applies the same standard, guided by the organization’s rules.

Not So Fast - Where AI Falls Short

Despite the remarkable benefits, AI code reviewers are no silver bullet. 2025’s tools, as advanced as they are, come with limitations and caveats that engineering teams must understand. Accuracy and noise are one concern. While CodeRabbit touts a 95% bug detection rate on familiar patterns, that applies to known issues - the AI might still miss novel bugs or complex logic errors that don’t match anything in its training.

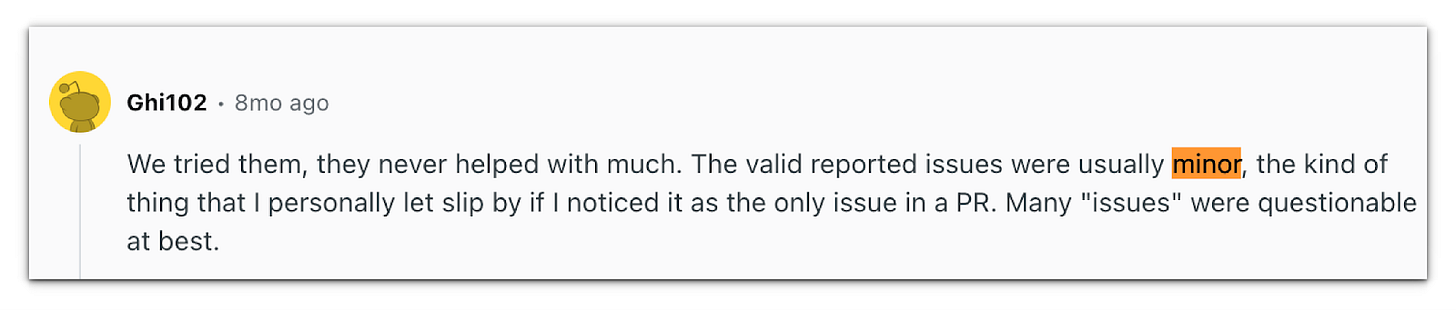

On the other hand, it can raise false alarms: some developers report that many “issues” flagged by AI were questionable or minor to the point of not truly needing a fix.

This noise can create alert fatigue, where developers tune out the AI’s comments if they feel too generic. Striking the right balance is key; for instance, tools like Devlo or others aim to prioritize only high-impact comments to avoid a wall of text in every review.
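The "only show high-impact comments" idea is easy to picture in code. Here is a minimal sketch, assuming each AI comment carries a severity label. The `ReviewComment` type, the severity names, and the sample comments are all hypothetical, and this is not how Devlo or CodeRabbit actually rank their findings.

```python
from dataclasses import dataclass

# Hypothetical severity scale, lowest to highest impact.
SEVERITY_RANK = {"nit": 0, "minor": 1, "major": 2, "critical": 3}


@dataclass
class ReviewComment:
    path: str
    line: int
    severity: str
    message: str


def high_impact(comments: list[ReviewComment], threshold: str = "major") -> list[ReviewComment]:
    """Keep comments at or above `threshold`, most severe first."""
    floor = SEVERITY_RANK[threshold]
    kept = [c for c in comments if SEVERITY_RANK[c.severity] >= floor]
    return sorted(kept, key=lambda c: SEVERITY_RANK[c.severity], reverse=True)


# Made-up review output for illustration.
comments = [
    ReviewComment("api/users.py", 12, "nit", "Prefer f-strings over str.format."),
    ReviewComment("api/users.py", 48, "critical", "SQL query does not handle null user IDs."),
    ReviewComment("api/orders.py", 7, "minor", "Duplicated validation logic."),
    ReviewComment("api/orders.py", 91, "major", "Loop re-queries the database on every iteration."),
]

shown = high_impact(comments)
for c in shown:
    print(f"[{c.severity}] {c.path}:{c.line} {c.message}")
```

Four comments go in, two come out. The hard part in practice is assigning the severity in the first place, which is exactly where these tools differ.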

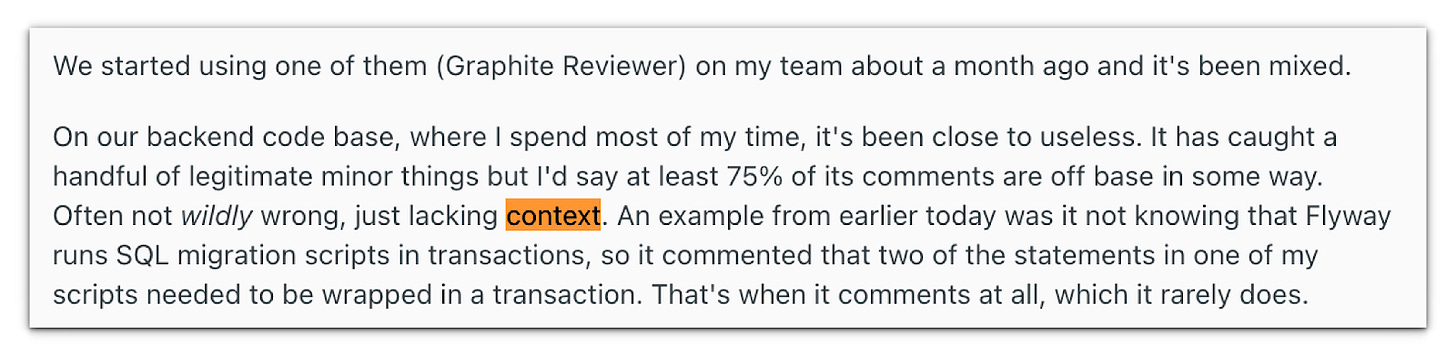

Context blindness is another challenge. An AI works with the information it’s given – typically the code diff, some surrounding code, maybe the project docs. It doesn’t inherently know the unwritten context: the business rationale, the ticket conversation, the future roadmap.

AI often lacks judgment. It can’t gauge risk vs. reward the way a human can, or decide that a deviation from best practice is acceptable given deadline pressures or one-off use cases.

There’s also the matter of human collaboration and mentorship. Code review isn’t solely about finding bugs; it’s a social process where team members learn from each other and build consensus on code decisions. An AI reviewer won’t join your team's Zoom call to discuss an alternative approach, nor will it patiently wait for someone’s input before deciding on a solution.

It won’t pick up on the emotional undercurrents, say, a junior dev who needs a confidence boost, or a situation where code quality might be intentionally a bit lower for a quick prototype. Relying entirely on AI could mean losing these human elements.

Another practical limitation is trust and accountability. Many organizations are understandably cautious about letting an AI have too much authority in the development cycle. If an AI advises merging a piece of code that later causes an outage, who takes responsibility?

Vendors like CodeRabbit emphasize privacy (with features like ephemeral analysis environments and end-to-end encryption), but companies in sensitive domains still have to vet these tools for compliance.

Augmenting, Not Replacing - The Road Ahead

Bringing AI into code review is prompting a cultural shift in software teams. By automating the tedious parts of reviews, we’re effectively saying that a chunk of what used to be a developer’s job can be offloaded to machines. This doesn’t diminish the role of developers - instead, it elevates it.

Freed from chasing missing semicolons or arguing over minor stylistic choices, human reviewers can focus on what truly matters.

Is this the right approach for our system? Are we handling all the edge cases? Is there a simpler design? No AI can fully answer these questions because they require insight into the project's ever-evolving human context.

The best teams of 2025 treat AI reviewers as partners. The AI handles repetitive tasks with superhuman consistency, while the humans make creative, complex, and value-laden decisions.

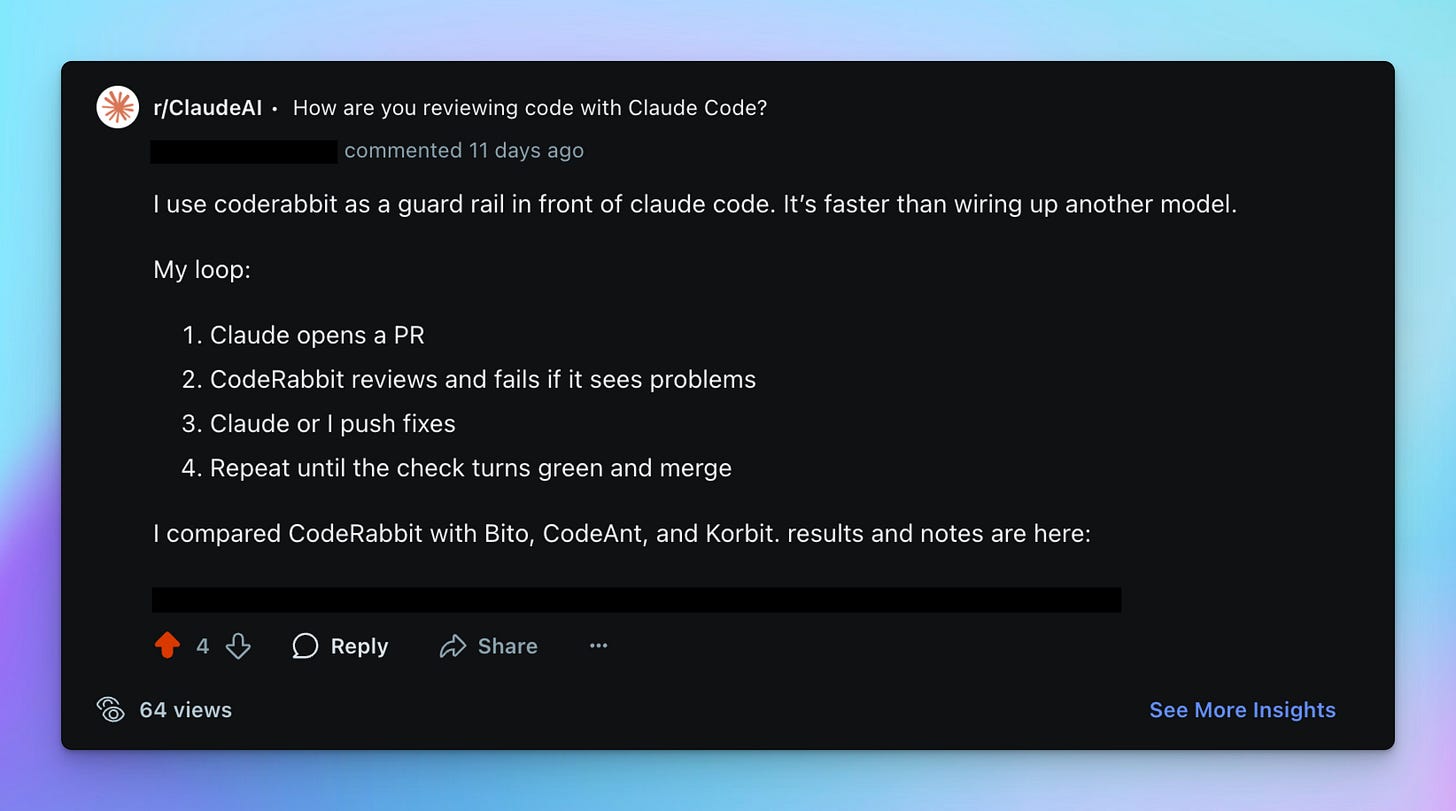

My loop to use AI as a code reviewer:

The outlook is exciting. As investment pours into this space (over $100 billion in AI companies in 2024 alone), we can expect AI reviewers to take on more of the review process. Early hints of this are already here; tools under development aim to review not just code diffs, but also UI changes, configuration, and even performance metrics in a truly holistic approach.

AI might also become more agentic, pointing out problems and taking action by automatically opening follow-up pull requests or running test suites. For instance, CodeRabbit’s roadmap hints at deeper integrations (imagine an AI that can create a JIRA ticket for a needed follow-up or directly commit a suggested fix after tests pass).

Conclusion

AI code review tools in 2025 are transforming the software development process by breaking the old bottlenecks and enabling a new level of productivity.

Teams that embrace these tools thoughtfully, using them to catch the easy stuff and inform the hard stuff, find they can move fast without breaking things.

The key is balance. Use the AI’s strength in consistency and speed, but apply human wisdom where judgment is needed.

The code review of the future isn’t a human or an AI affair. It’s a collaboration.

Drop your thoughts in the comments box and let’s discuss.

That’s a wrap for this edition.